Articles from Cerebras Systems

Today at the JP Morgan Healthcare Conference in San Francisco, Cerebras Systems, in collaboration with Mayo Clinic, announced significant progress in developing artificial intelligence tools to advance patient care. Together, Cerebras and Mayo Clinic have developed a world-class genomic foundation model designed to support physicians and patients.

By Cerebras Systems · Via Business Wire · January 14, 2025

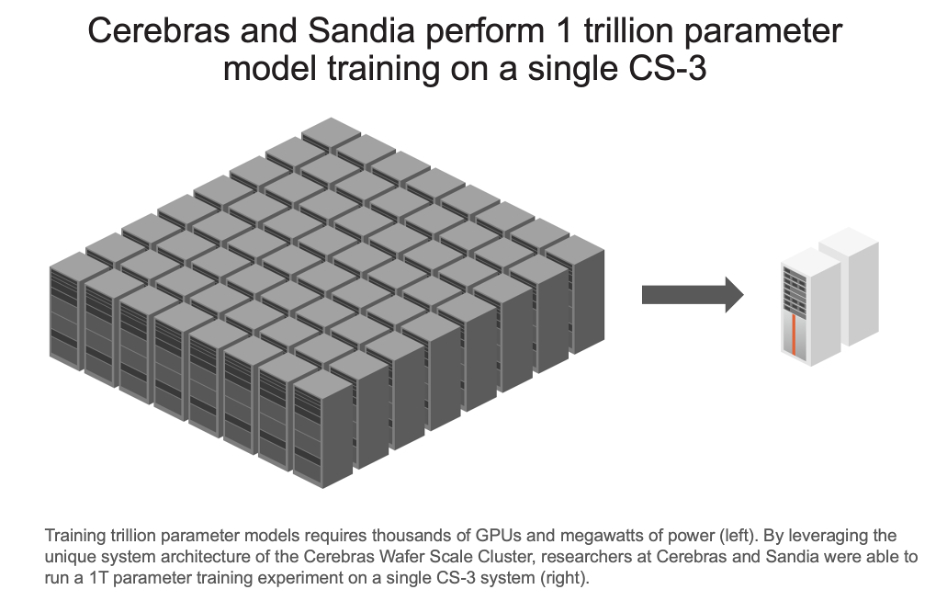

Today at NeurIPS 2024, Cerebras Systems, the pioneer in accelerating generative AI, announced a groundbreaking achievement in collaboration with Sandia National Laboratories: successfully demonstrating training of a 1-trillion-parameter AI model on a single CS-3 system. Trillion-parameter models represent the state of the art in today’s LLMs, requiring thousands of GPUs and dozens of hardware experts to train. By leveraging Cerebras’ Wafer Scale Cluster technology, researchers at Sandia were able to initiate training on a single AI accelerator, a one-of-a-kind achievement for frontier model development.

By Cerebras Systems · Via Business Wire · December 10, 2024

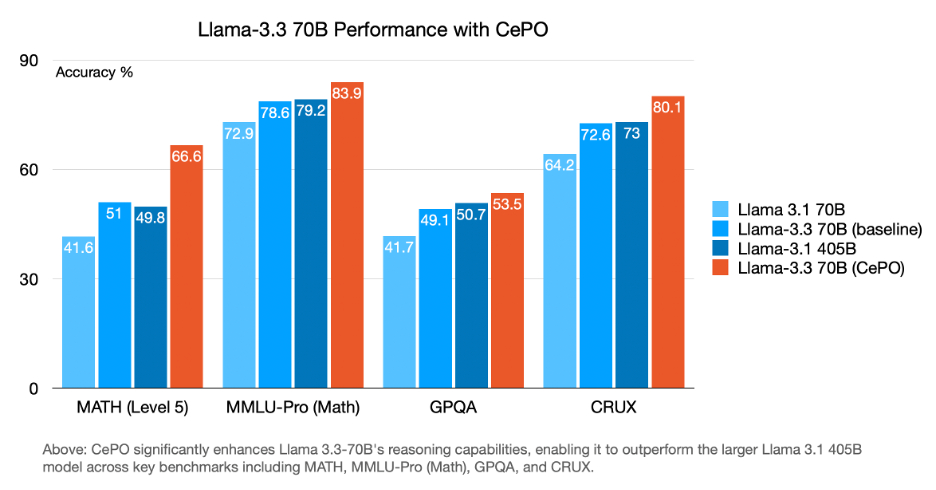

Today at NeurIPS 2024, Cerebras Systems, the pioneer in accelerating generative AI, announced CePO (Cerebras Planning and Optimization), a powerful framework that dramatically enhances the reasoning capabilities of Meta's Llama family of models. Through sophisticated test-time computation techniques, CePO enables Llama 3.3 70B to outperform Llama 3.1 405B across challenging benchmarks while maintaining interactive speeds of 100 tokens per second, a first among test-time reasoning models.

By Cerebras Systems · Via Business Wire · December 10, 2024

Cerebras Systems, the pioneer in accelerating generative AI, today announced the appointment of Thomas (Tom) Lantzsch as a new independent board member. Lantzsch is a seasoned technology executive with extensive experience in scaling AI-driven businesses and driving transformational growth in the technology sector.

By Cerebras Systems · Via Business Wire · November 22, 2024

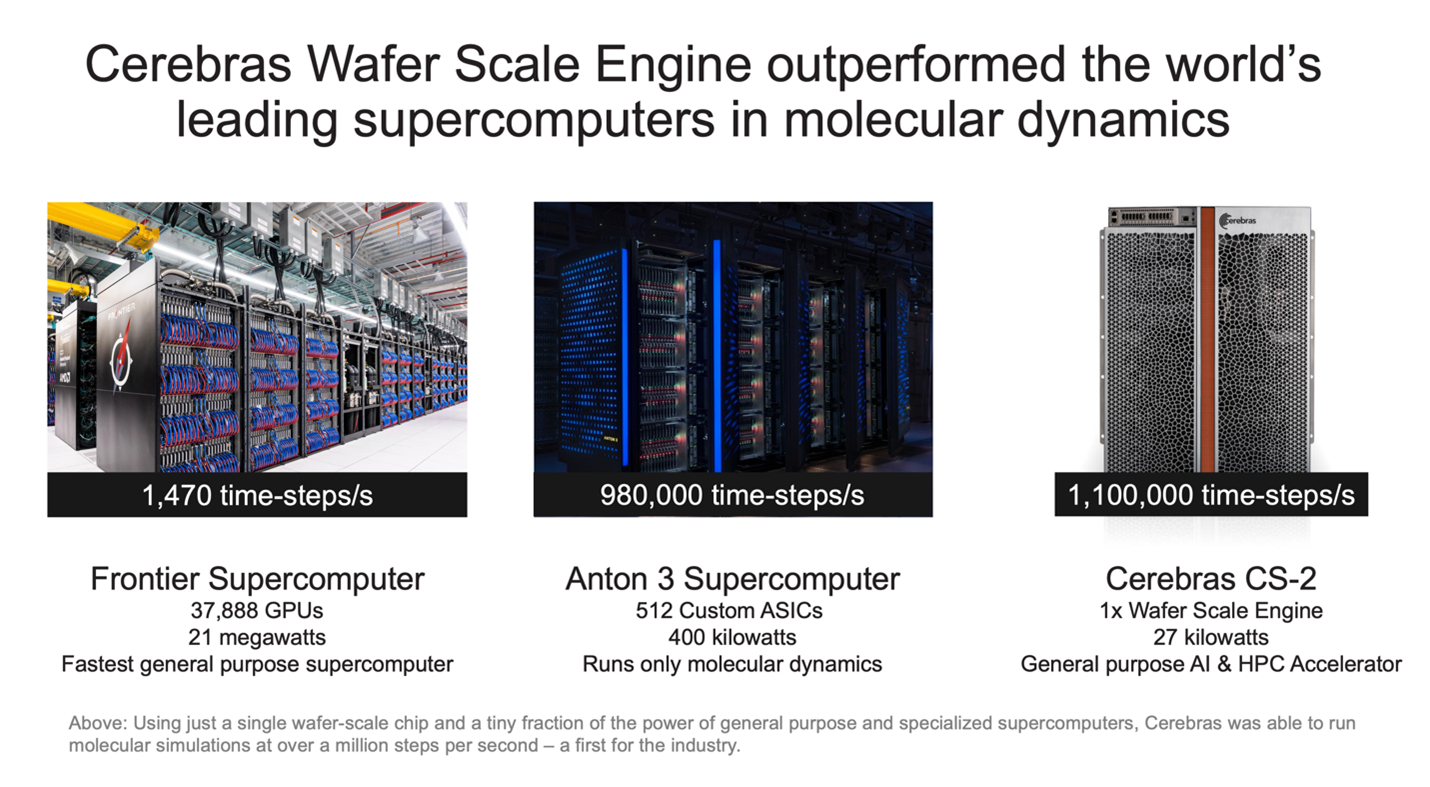

Cerebras Systems, the pioneer in accelerating generative AI, in collaboration with researchers from Sandia, Lawrence Livermore, and Los Alamos National Laboratories, has set another world record and achieved an important breakthrough in molecular dynamics (MD) simulations. For the first time in the history of the field, researchers achieved more than 1 million simulation steps per second. A single Cerebras Wafer Scale Engine achieved over 1.1 million steps per second, 748x faster than what is possible on the world's leading supercomputer, 'Frontier'.

By Cerebras Systems · Via Business Wire · November 18, 2024

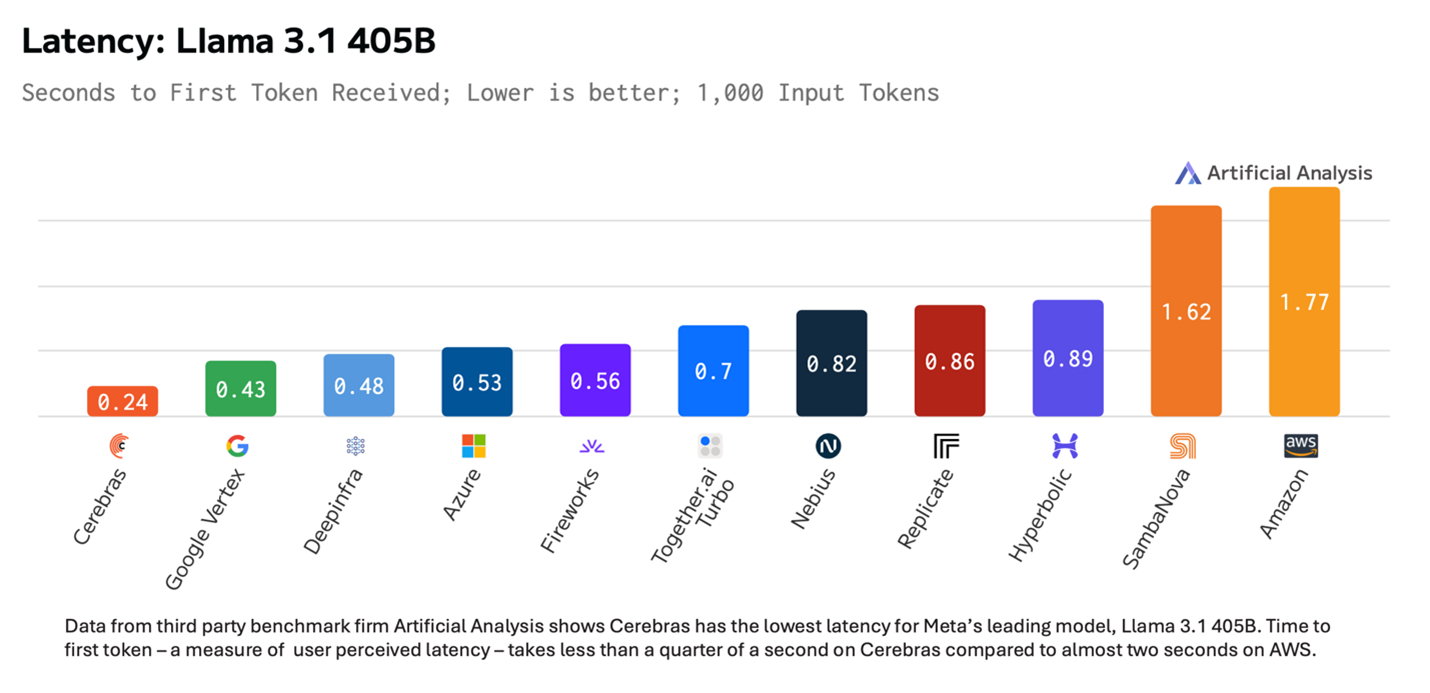

Cerebras Systems today announced that it has set a new performance record for Llama 3.1 405B, a leading frontier model released by Meta AI: Cerebras Inference generated 969 output tokens per second. Data from third-party benchmark firm Artificial Analysis shows Cerebras up to 75 times faster than GPU-based offerings from hyperscalers. Cerebras Inference is running multiple customer workloads on Llama’s 405B model at 128K full context length and 16-bit precision.

By Cerebras Systems · Via Business Wire · November 18, 2024

Today, Cerebras Systems, the pioneer in high performance AI compute, smashed its previous industry record for inference, delivering 2,100 tokens/second performance on Llama 3.1 70B. This is 16x faster than any known GPU solution and 68x faster than hyperscale clouds, as measured by Artificial Analysis, a third-party benchmarking organization. Moreover, Cerebras Inference serves Llama 70B more than 8x faster than GPUs serve Llama 3B, an aggregate 184x advantage (8x faster on a model 23x larger). By providing Instant Inference for large models, Cerebras is unlocking new AI use cases powered by real-time, higher-quality responses, chain-of-thought reasoning, more interactions, and higher user engagement.

By Cerebras Systems · Via Business Wire · October 24, 2024

Cerebras Systems (“Cerebras”) today announced that it has filed a registration statement on Form S-1 with the U.S. Securities and Exchange Commission (“SEC”) relating to a proposed initial public offering of its Class A common stock. The number of shares of Class A common stock to be offered and the price range for the proposed offering have not yet been determined. The offering is subject to market conditions, and there can be no assurance as to whether or when the offering may be completed, or as to the actual size or other terms of the offering.

By Cerebras Systems · Via Business Wire · September 30, 2024

Cerebras Systems today announced the signing of a Memorandum of Understanding (MoU) with Aramco, under which they aim to bring high performance AI inference to industries, universities, and enterprises in Saudi Arabia. Aramco plans to build, train, and deploy world-class large language models (LLMs) using Cerebras’ industry-leading CS-3 systems, in order to help accelerate AI innovation.

By Cerebras Systems · Via Business Wire · September 11, 2024

Today, Cerebras Systems, the pioneer in high performance AI compute, announced Cerebras Inference, the fastest AI inference solution in the world. Delivering 1,800 tokens per second for Llama 3.1 8B and 450 tokens per second for Llama 3.1 70B, Cerebras Inference is 20 times faster than NVIDIA GPU-based solutions in hyperscale clouds. Starting at just 10 cents per million tokens, Cerebras Inference is priced at a fraction of GPU solutions, providing 100x higher price-performance for AI workloads.

By Cerebras Systems · Via Business Wire · August 27, 2024

Cerebras Systems, a pioneer in accelerating generative AI, today announced the appointment of new independent Board members Glenda Dorchak, a former IBM, Intel and Spansion executive, and Paul Auvil, former CFO of VMware and Proofpoint. Both industry veterans bring a range of corporate governance and technology expertise to Cerebras’ world-class team of AI leaders. Mr. Auvil will chair the Cerebras Audit Committee, and Ms. Dorchak will chair its Compensation Committee.

By Cerebras Systems · Via Business Wire · August 7, 2024

Cerebras Systems today announced that it has confidentially submitted a draft registration statement on Form S-1 with the U.S. Securities and Exchange Commission (“SEC”) relating to the proposed initial public offering of its common stock. The size and price range for the proposed offering have yet to be determined. The initial public offering is subject to market and other conditions and the completion of the SEC’s review process.

By Cerebras Systems · Via Business Wire · August 1, 2024

Cerebras Systems, a pioneer in accelerating generative artificial intelligence (AI), today announced a collaboration with Dell Technologies to deliver groundbreaking AI compute infrastructure for generative AI. The collaboration combines best-of-breed technology from both companies to create an ideal solution designed for large-scale AI deployments.

By Cerebras Systems · Via Business Wire · June 12, 2024

Cerebras Systems, a pioneer in accelerating generative artificial intelligence (AI), today announced a multi-year partnership with world-leading AI company Aleph Alpha to develop secure sovereign AI solutions. The first deliverables of the collaboration will be cutting-edge generative AI models, developed with BWI for the German Armed Forces and trained to the industry’s highest degree of accuracy.

By Cerebras Systems · Via Business Wire · May 15, 2024

Cerebras Systems, the pioneer in accelerating generative AI, and Neural Magic, a leader in high-performance enterprise inference servers, today announced the groundbreaking results of their collaboration for sparse training and deployment of large language models (LLMs). The collaboration achieved an unprecedented 70% parameter reduction with full accuracy recovery. Training on Cerebras CS-3 systems and deploying on Neural Magic inference server solutions enables significantly faster, more efficient, and lower-cost LLMs, making them accessible to a broader range of organizations and industries.

By Cerebras Systems · Via Business Wire · May 15, 2024

Cerebras Systems, the pioneer in accelerating generative AI, in collaboration with researchers from Sandia, Lawrence Livermore, and Los Alamos National Laboratories, has achieved an extraordinary breakthrough in molecular dynamics (MD) simulations. Using the second-generation Cerebras Wafer Scale Engine (WSE-2), researchers were able to perform atomic-scale simulations at the millisecond scale, 179x faster than what is possible on the world's leading supercomputer, 'Frontier,' which is built with 39,000 GPUs.

By Cerebras Systems · Via Business Wire · May 15, 2024

Cerebras Systems, a pioneer in accelerating generative artificial intelligence (AI), today announced the company’s plans to deliver groundbreaking performance and value for production AI. By using Cerebras’ industry-leading CS-3 AI accelerators for training and the AI 100 Ultra, a product of Qualcomm Technologies, Inc., for inference, production-grade deployments can realize up to a 10x price-performance improvement.

By Cerebras Systems · Via Business Wire · March 13, 2024

Cerebras Systems, the pioneer in accelerating generative AI, and G42, the Abu Dhabi-based leading technology holding group, today announced the build of Condor Galaxy 3 (CG-3), the third cluster of their constellation of AI supercomputers, the Condor Galaxy. Featuring 64 of Cerebras’ newly announced CS-3 systems, all powered by the industry’s fastest AI chip, the Wafer-Scale Engine 3 (WSE-3), Condor Galaxy 3 will deliver 8 exaFLOPs of AI compute with 58 million AI-optimized cores.

By Cerebras Systems · Via Business Wire · March 13, 2024

Cerebras Systems, the pioneer in accelerating generative AI, has doubled down on its existing world record for the fastest AI chip with the introduction of the Wafer-Scale Engine 3. The WSE-3 delivers twice the performance of the previous record-holder, the Cerebras WSE-2, at the same power draw and for the same price. Purpose-built for training the industry’s largest AI models, the 5nm-based, 4-trillion-transistor WSE-3 powers the Cerebras CS-3 AI supercomputer, delivering 125 petaflops of peak AI performance through 900,000 AI-optimized compute cores.

By Cerebras Systems · Via Business Wire · March 13, 2024

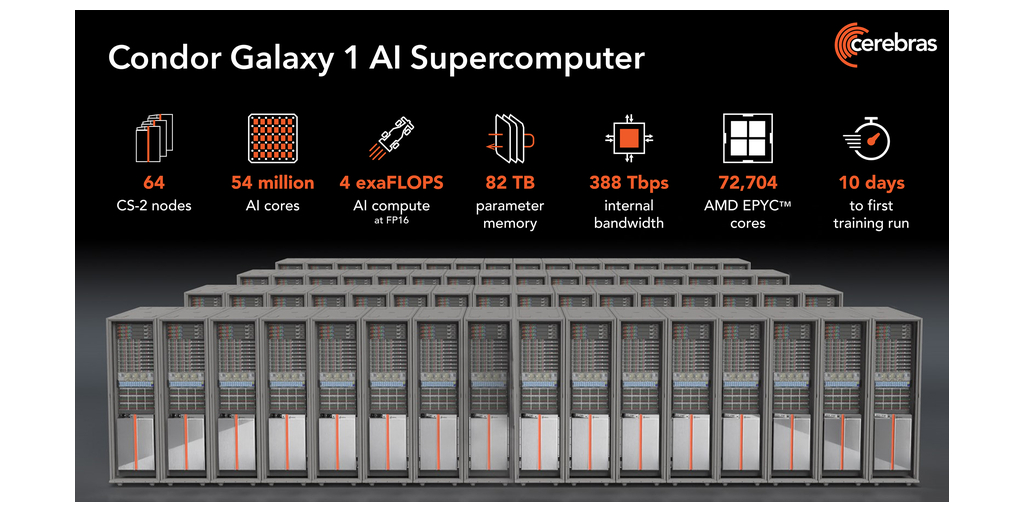

Cerebras Systems, the pioneer in accelerating generative AI, today announced that the Barcelona Supercomputing Center (BSC) has completed training FLOR-6.3B, the state-of-the-art English-Spanish-Catalan large language model. FLOR-6.3B was trained in just 2.5 days on Condor Galaxy (CG-1), the massive AI supercomputer built from 64 Cerebras CS-2s by Cerebras and G42. FLOR-6.3B continues Cerebras’ leading work on multilingual models, a trend that started with the introduction of Jais, the leading Arabic-English model.

By Cerebras Systems · Via Business Wire · January 31, 2024

Cerebras Systems, a pioneer in accelerating generative AI, today announced Mayo Clinic as its first generative AI collaborator for the development of large language models (LLMs) for medical applications. Unveiled at the JP Morgan Healthcare Conference in San Francisco, the multi-year collaboration is already producing pioneering new LLMs to improve patient outcomes and diagnoses, leveraging Mayo Clinic’s robust longitudinal data repository and Cerebras’ industry-leading generative AI compute to accelerate breakthrough insights.

By Cerebras Systems · Via Business Wire · January 15, 2024

Cerebras Systems, the pioneer in accelerating generative AI, today announced the appointment of Shirley Li as General Counsel, reporting directly to CEO Andrew Feldman. With more than a decade of experience as an advocate and operator at leading technology companies, Li brings a wealth of business and legal experience to Cerebras.

By Cerebras Systems · Via Business Wire · January 4, 2024

Cerebras Systems, the pioneer in accelerating generative AI, and Petuum, the generative AI company focused on building transparent LLMs, in partnership with MBZUAI today launched CrystalCoder, a new 7 billion parameter model designed for English language and coding tasks. While previous models were suitable for either English or coding, CrystalCoder achieves high accuracy for both tasks simultaneously. Trained on Condor Galaxy 1, the AI supercomputer built by G42 and Cerebras, CrystalCoder-7B has been released under the new reproducible LLM360 methodology, which promotes open-source, transparent, and responsible use. CrystalCoder and the LLM360 release methodology are available now on Hugging Face.

By Cerebras Systems · Via Business Wire · December 11, 2023

Cerebras Systems, the pioneer in accelerating generative AI, today announced that it has hired industry expert Julie Shin Choi as its Senior Vice President and Chief Marketing Officer. In this role, she will oversee global brand and communications, community engagement, demand generation and all go-to-market activities. Choi will also join the Cerebras executive team where she will help accelerate the growth and adoption of Cerebras technology into existing markets, as well as new ones.

By Cerebras Systems · Via Business Wire · November 29, 2023

Cerebras Systems, the pioneer in accelerating generative AI, and G42, the leading UAE-based technology holding group, today announced that the Condor Galaxy network of nine interconnected supercomputers has reached its second phase of build-out. With the completion of Condor Galaxy 1, Cerebras and G42 have broken ground on Condor Galaxy 2 (CG-2). CG-2 will deliver four exaFLOPs and 54 million AI-optimized compute cores, expanding the Condor Galaxy network to a total of eight exaFLOPs and 108 million cores upon completion. This represents an important milestone in Cerebras and G42’s plan to build a 36 exaFLOP constellation of AI supercomputers.

By Cerebras Systems · Via Business Wire · November 13, 2023

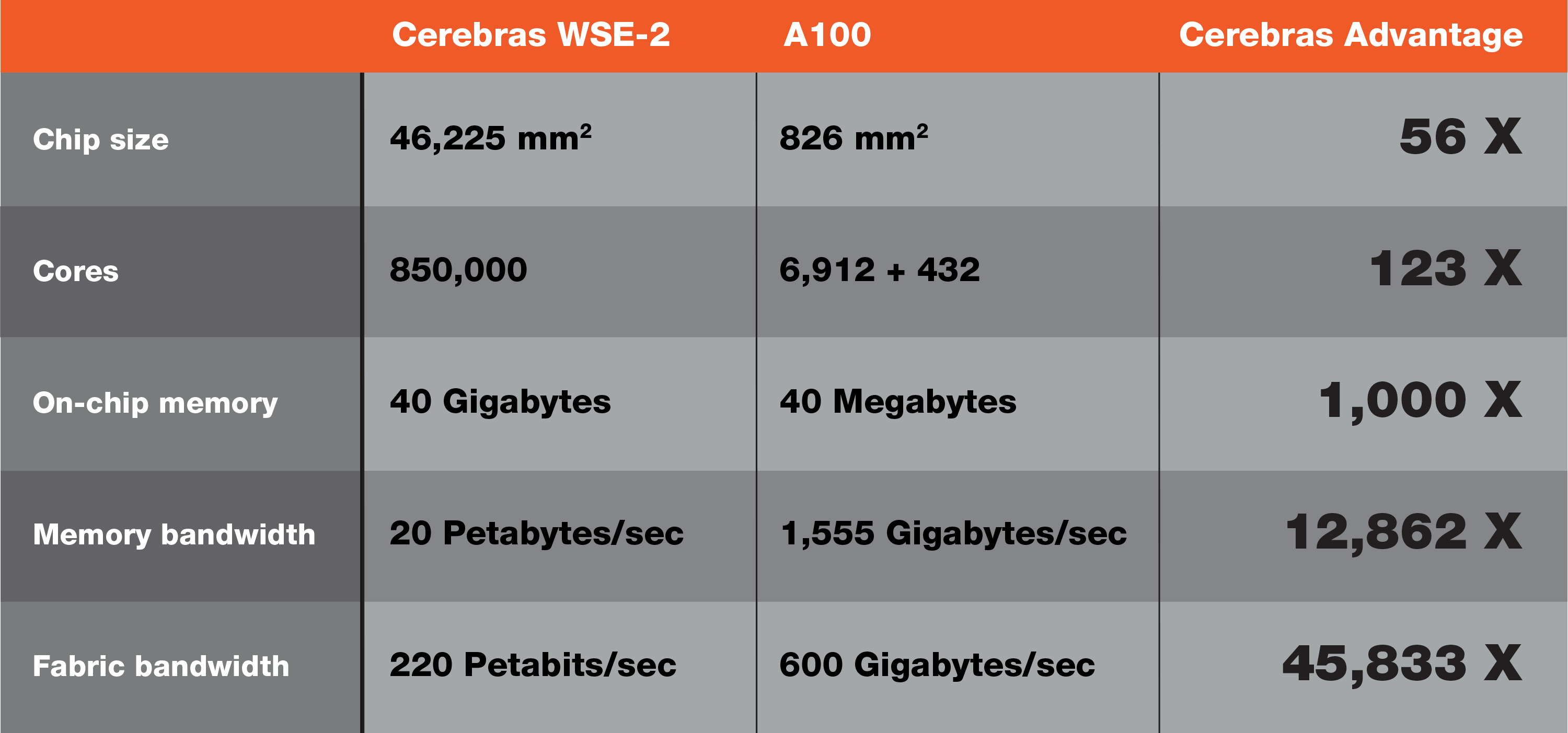

Cerebras Systems, the pioneer in accelerating generative AI, today announced the achievement of a 130x speedup over Nvidia A100 GPUs on a key nuclear energy HPC simulation kernel, developed by researchers at Argonne National Laboratory. This result demonstrates the performance and versatility of the Cerebras Wafer-Scale Engine (WSE-2) and ensures that the U.S. continues to be the global leader in supercomputing for energy and defense applications.

By Cerebras Systems · Via Business Wire · November 13, 2023

Cerebras Systems, the pioneer in accelerating generative AI, today announced that it has selected VAST Data, the AI data platform company, to help deliver advanced data solutions that will accelerate the training and accuracy of generative AI workloads. By leveraging the scalable and secure multi-tenant VAST Data Platform, Cerebras scales capacity and performance of its leading CS-2 AI supercomputers, including the recently announced Condor Galaxy, the world’s largest AI supercomputer network built in partnership with G42 Cloud, now part of Core42.

By Cerebras Systems · Via Business Wire · October 16, 2023

King Abdullah University of Science and Technology (KAUST), Saudi Arabia’s premier research university, and Cerebras Systems, the pioneer in accelerating generative AI, announced that their joint pioneering work on multi-dimensional seismic processing has been selected as a finalist for the 2023 Gordon Bell Prize, the most prestigious award for outstanding achievements in HPC. By developing a Tile Low-Rank Matrix-Vector Multiplications (TLR-MVM) kernel that takes full advantage of the unique architecture of the Cerebras CS-2 systems in the Condor Galaxy AI supercomputer, built by Cerebras and their strategic partner G42, researchers at KAUST and Cerebras achieved production-worthy accuracy for seismic applications with a record-breaking sustained bandwidth of 92.58 PB/s, highlighting how AI-customized architectures can enable a new generation of seismic algorithms.

By Cerebras Systems · Via Business Wire · September 20, 2023

Cerebras Systems, the pioneer in accelerating generative AI, today announced it has promoted Dhiraj Mallick to serve as the company’s chief operating officer (COO). Mallick joined Cerebras in 2018 and had previously been senior vice president of engineering and operations. In his expanded role as COO, Mallick will contribute heavily to the company strategy, create and drive operational vision, and streamline operations across business functions. He will also be responsible for the company’s operations and supply chain functions, including systems and hardware development, manufacturing and hardware engineering operations, foundry and supplier management, supply planning and logistics.

By Cerebras Systems · Via Business Wire · September 12, 2023

Cerebras Systems, the pioneer in accelerating generative AI, and G42, the UAE-based technology holding group, today announced Condor Galaxy, a network of nine interconnected supercomputers, offering a new approach to AI compute that promises to significantly reduce AI model training time. The first AI supercomputer on this network, Condor Galaxy 1 (CG-1), has 4 exaFLOPs and 54 million cores. Cerebras and G42 are planning to deploy two more such supercomputers, CG-2 and CG-3, in the U.S. in early 2024. With a planned capacity of 36 exaFLOPs in total, this unprecedented supercomputing network will revolutionize the advancement of AI globally.

By Cerebras Systems · Via Business Wire · July 20, 2023

Cerebras Systems, the pioneer in artificial intelligence (AI) compute for generative AI, today announced it has trained and is releasing a series of seven GPT-based large language models (LLMs) for open use by the research community. This is the first time a company has used non-GPU-based AI systems to train LLMs of up to 13 billion parameters and has shared the models, weights, and training recipe via the industry-standard Apache 2.0 license. All seven models were trained on the 16 CS-2 systems in the Cerebras Andromeda AI supercomputer.

By Cerebras Systems · Via Business Wire · March 28, 2023

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) compute, and Green AI Cloud, the most sustainable super compute platform in Europe, today announced the availability of Cerebras Cloud at Green AI. As Green AI is the first cloud computing provider in Europe to offer the industry-leading CS-2 system, its customers can now easily train GPT-class models much faster than on traditional cloud service providers and with significantly less environmental impact.

By Cerebras Systems · Via Business Wire · December 14, 2022

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, and Cirrascale Cloud Services®, a provider of deep learning infrastructure solutions for autonomous vehicle, NLP, and computer vision workflows, today announced the availability of the Cerebras AI Model Studio. Hosted on the Cerebras Cloud @ Cirrascale, this new offering enables customers to train generative Transformer (GPT)-class models, including GPT-J, GPT-3 and GPT-NeoX, on industry-leading Cerebras Wafer-Scale Clusters, including the newly announced Andromeda AI supercomputer.

By Cerebras Systems · Via Business Wire · November 29, 2022

Jasper, the category-leading AI content platform, and Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, today announced a partnership to accelerate adoption and improve the accuracy of generative AI across enterprise and consumer applications. Using Cerebras’ newly announced Andromeda AI supercomputer, Jasper can train its highly compute-intensive models in a fraction of the time and extend the reach of generative AI models to the masses.

By Cerebras Systems · Via Business Wire · November 29, 2022

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, today unveiled Andromeda, a 13.5 million core AI supercomputer, now available and being used for commercial and academic work. Built with a cluster of 16 Cerebras CS-2 systems and leveraging Cerebras MemoryX and SwarmX technologies, Andromeda delivers more than 1 Exaflop of AI compute and 120 Petaflops of dense compute at 16-bit half precision. It is the only AI supercomputer to ever demonstrate near-perfect linear scaling on large language model workloads relying on simple data parallelism alone.

By Cerebras Systems · Via Business Wire · November 14, 2022

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) compute, today announced record-breaking performance on the scientific compute workload of forming and solving field equations. In collaboration with the Department of Energy’s National Energy Technology Laboratory (NETL), Cerebras demonstrated its CS-2 system, powered by the Wafer-Scale Engine (WSE), was as much as 470 times faster than NETL’s Joule Supercomputer in field equation modeling, delivering speeds beyond what either CPUs or GPUs are currently able to achieve.

By Cerebras Systems · Via Business Wire · November 10, 2022

Sandia National Laboratories and its partners announced a new project today to investigate the application of Cerebras Systems’ Wafer-Scale Engine technology to accelerate advanced simulation and computing applications in support of the nation’s stockpile stewardship mission. The National Nuclear Security Administration’s Advanced Simulation and Computing program is sponsoring the work and Sandia, Lawrence Livermore and Los Alamos national labs will collaborate with Cerebras Systems on the project.

By Cerebras Systems · Via Business Wire · October 17, 2022

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, today unveiled the Cerebras Wafer-Scale Cluster, delivering near-perfect linear scaling across hundreds of millions of AI-optimized compute cores while avoiding the pain of distributed computing. With a Wafer-Scale Cluster, users can distribute even the largest language models from a Jupyter notebook running on a laptop with just a few keystrokes. This replaces months of painstaking work with clusters of graphics processing units (GPUs).

By Cerebras Systems · Via Business Wire · September 14, 2022

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, released yet another industry-first capability today. Customers can now rapidly train Transformer-style natural language AI models with 20x longer sequences than is possible using traditional computer hardware. This new capability is expected to lead to breakthroughs in natural language processing (NLP). By providing vastly more context to the understanding of a given word, phrase or strand of DNA, the long sequence length capability gives NLP models a much finer-grained understanding and better predictive accuracy.

By Cerebras Systems · Via Business Wire · August 31, 2022

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, today announced its continued global expansion with the opening of a new office in Bangalore, India. Led by industry veteran Lakshmi Ramachandran, the new engineering office will focus on accelerating R&D efforts and supporting local customers. With more than twenty engineers currently employed and a target of more than sixty by year end, Cerebras is looking to rapidly build its presence in Bangalore.

By Cerebras Systems · Via Business Wire · August 29, 2022

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, and the Computer History Museum (CHM), the leading institution decoding technology (its computing past, digital present, and future impact on humanity), today unveiled a new display featuring Cerebras’ Wafer-Scale Engine (WSE). The largest computer chip in the world and roughly the size of a dinner plate, the WSE-2 contains 2.6 trillion transistors and 850,000 AI-optimized cores, and is optimized in every way for AI work.

By Cerebras Systems · Via Business Wire · August 3, 2022

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) computing, today announced, for the first time ever, the ability to train models with up to 20 billion parameters on a single CS-2 system, a feat not possible on any other single device. By enabling a single CS-2 to train these models, Cerebras reduces the system engineering time necessary to run large natural language processing (NLP) models from months to minutes. It also eliminates one of the most painful aspects of NLP, namely the partitioning of the model across hundreds or thousands of small graphics processing units (GPUs).

By Cerebras Systems · Via Business Wire · June 22, 2022

At ISC 2022, Cerebras Systems, the pioneer in high performance artificial intelligence (AI) computing, shared news about its many supercomputing partners, including Edinburgh Parallel Computing Centre (EPCC), Leibniz Supercomputing Centre (LRZ), Lawrence Livermore National Laboratory, Argonne National Laboratory (ANL), the National Center for Supercomputing Applications (NCSA), and the Pittsburgh Supercomputing Center (PSC).

By Cerebras Systems · Via Business Wire · May 31, 2022

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) computing, today announced that the National Center for Supercomputing Applications (NCSA) has deployed the Cerebras CS-2 system in its HOLL-I supercomputer.

By Cerebras Systems · Via Business Wire · May 31, 2022

The Leibniz Supercomputing Centre (LRZ), Cerebras Systems, and Hewlett Packard Enterprise (HPE), today announced the joint development and delivery of a new system featuring next-generation AI technologies to significantly accelerate scientific research and innovation in AI for Bavaria.

By Cerebras Systems · Via Business Wire · May 25, 2022

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) computing, received Bio-IT World Conference & Expo’s Best in Show award for the Cerebras CS-2 system, the world’s fastest AI solution. At the conference, Cerebras also announced biopharmaceutical leader AbbVie as a customer, achieving 128 times the performance of a graphics processing unit (GPU) on a single CS-2.

By Cerebras Systems · Via Business Wire · May 6, 2022

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) computing, and AbbVie, a global biopharmaceutical company, today announced a landmark achievement in AbbVie’s AI work. Using a Cerebras CS-2 on biomedical natural language processing (NLP) models, AbbVie achieved performance in excess of 128 times that of a graphics processing unit (GPU), while using 1/3 the energy. Not only did AbbVie train the models more quickly and with less energy, but thanks to the CS-2’s simple, standards-based programming workflow, the time usually allocated to model setup and tuning was also dramatically reduced.

By Cerebras Systems · Via Business Wire · May 5, 2022

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) computing, today released version 1.2 of the Cerebras Software Platform, CSoft, with expanded support for PyTorch and TensorFlow. In addition, customers can now quickly and easily train models with billions of parameters via Cerebras’ weight streaming technology.

By Cerebras Systems · Via Business Wire · April 13, 2022

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) compute, and nference, an AI-driven health technology company, today announced a collaboration to accelerate natural language processing (NLP) for biomedical research and development by orders of magnitude with a Cerebras CS-2 system installed at the nference headquarters in Cambridge, Mass.

By Cerebras Systems · Via Business Wire · March 14, 2022

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, and G42, the leading UAE-based AI and cloud computing company, today announced at GMIS the signing of a memorandum of understanding (MOU) under which they will bring high performance AI capabilities to the Middle East. G42, which manages the region’s largest cloud computing infrastructure, will upgrade its technology stack with Cerebras’ industry-leading CS-2 systems to deliver unparalleled AI compute capabilities to its partners and the broader ecosystem.

By Cerebras Systems · Via Business Wire · November 22, 2021

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, today announced it has raised $250 million in a Series F financing round, valuing the company at over $4 billion. To date, the company has raised $720 million. The Series F round was led by Alpha Wave Ventures, a global growth-stage partnership between Falcon Edge and Chimera, along with Abu Dhabi Growth Fund (ADG). Alpha Wave Ventures and ADG join a world-class group of investors including Altimeter Capital, Benchmark Capital, Coatue Management, Eclipse Ventures, Moore Strategic Ventures, and VY Capital.

By Cerebras Systems · Via Business Wire · November 10, 2021

Cirrascale Cloud Services®, a provider of deep learning infrastructure solutions for autonomous vehicle, Natural Language Processing (NLP) and computer vision workflows, today announced the availability of its Cerebras Cloud @ Cirrascale platform. Delivering fast and flexible training with easy programming using standard ML frameworks and Cerebras’ software, the Cerebras Cloud @ Cirrascale brings the industry-leading performance of Cerebras’ Wafer-Scale Engine (WSE-2) deep learning processor to more users through Cirrascale’s proven scalable and accessible cloud service.

By Cerebras Systems · Via Business Wire · September 16, 2021

Cerebras Systems, the pioneer in innovative compute solutions for Artificial Intelligence (AI), today unveiled the world’s first brain-scale AI solution. The human brain contains on the order of 100 trillion synapses. The largest AI hardware clusters were on the order of 1% of human brain scale, or about 1 trillion synapse equivalents, called parameters. Even at only a fraction of full human brain scale, these clusters of graphics processors consume acres of space and megawatts of power, and require dedicated teams to operate.

By Cerebras Systems · Via Business Wire · August 24, 2021

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) compute, and Peptilogics, a biotechnology platform company and an emerging leader in leveraging computation to design novel therapeutics, today announced a collaboration to accelerate the development cycle of peptide therapeutics through AI.

By Cerebras Systems · Via Business Wire · August 18, 2021

Tokyo Electron Device (TED) and Cerebras Systems, a company dedicated to accelerating Artificial Intelligence (AI) compute, today announced the opening of the TED AI Lab, featuring the industry-leading Cerebras Systems CS-1 AI accelerator. Designed to test, verify and support deep learning applications, including Natural Language Processing (NLP), the new TED AI Lab selected CS-1 in order to dramatically reduce training time for increasingly complex AI models.

By Cerebras Systems · Via Business Wire · July 20, 2021

Cerebras Systems, a company dedicated to accelerating Artificial Intelligence (AI) compute, today announced the appointment of Rebecca Boyden as Vice President and General Counsel. With more than 20 years of experience advising high-profile public companies and their Boards of Directors, Boyden brings a wealth of business, executive and legal experience to Cerebras.

By Cerebras Systems · Via Business Wire · June 28, 2021

Cerebras Systems, a company dedicated to accelerating Artificial Intelligence (AI) compute, today unveiled the largest AI processor ever made, the Wafer Scale Engine 2 (WSE-2). Custom-built for AI work, the 7nm-based WSE-2 delivers a massive leap forward for AI compute, crushing Cerebras’ previous world record with a single chip that boasts 2.6 trillion transistors and 850,000 AI-optimized cores. By comparison, the largest graphics processing unit (GPU) has only 54 billion transistors, 2.55 trillion fewer than the WSE-2. The WSE-2 also has 123x more cores and 1,000x more high-performance on-chip memory than GPU competitors.

By Cerebras Systems · Via Business Wire · April 20, 2021

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, today announced the appointment of Rupal Shah Hollenbeck as Vice President and Chief Marketing Officer (CMO). With more than 25 years of high-tech experience supporting S&P 500 companies, including senior positions at Intel and Oracle, Hollenbeck will lead Cerebras’ global marketing organization, including the company’s growth and expansion into new markets.

By Cerebras Systems · Via Business Wire · April 13, 2021